M I r N s

March 13 - Aug 1, 2021

All sorts of things in this world behave like mirrors (Lacan)

This exhibition emerged from a rich period of enquiry around equity and diversity. The question was raised, how or can a diverse audience actually be reflected through technology-based works in the contested space of an art gallery? This interactive exhibition was put together as a way to explore how machines literally reflect, interpret and ‘see’ a diversity of visitors to the gallery. Human interaction with the works further reveals how we perceive and interpret the actions, behaviour and output of these mirroring machines. In this liminal space, a multiplicity of ephemeral portraits and self-portraits are generated and flow; saved to machinic and biologic memory. Through interactive robotics, neural networks, algorithms, coding, artificial intelligence, micro controllers, proximity & motion sensors and facial recognition these mirroring machines reflect and expose human diversity in a world of technology.

MirNs refers to Mirror Neurons, of intense interest in biology, psychology and physiology as well as robotics, AI, and coding. Scientists theorize that the mirroring nature of MirNs generate imitative behaviours, resulting in empathy and the capability for aesthetic experience. It has been stated that Mirror Neurons are responsible for making us human.

The question of what will continue to hold value in a world of technology is becoming increasingly important. The reflection of what is human remains an exemplar…but is that changing? As the boundaries between biology and technology become more and more blurred how will the reflection of what is human change the world we live in? How will humanity be reflected in the future? .

Curation + Design: Sarah Joyce + Gordon Duggan

Mario Klingemann

Uncanny Mirror (2018)

Created by a pioneer in AI (artificial intelligence), the Uncanny Mirror creates shifting, real-time AI portraits of each viewer. It constantly mirrors, analyses, builds and morphs each face it sees from all the faces it has ever seen. In the case of the New Media Gallery installation the cache of faces have been captured in exhibitions at Seoul, Basel, Munich, Vevey/Switzerland, St Petersburg and at New Media Gallery. Questions around the nature of facial recognition and determinations around audience diversity and racial discrimination thus become an integral and important aspect of this work. The work contains a built-in camera that captures viewers multiple times. Facial-Recognition technology is employed. Each new portrait draws on the accumulated knowledge of the machine; each portrait produced contains something of those who came before. Once a face is recognized, selected biometric face markers are extracted together with a rough idea of pose and hand movements; the more it sees the more it learns. It takes the input, makes a sketch and then passes this information on to the next model, which takes the sketch and creates the image seen in the ‘mirror’. The more it sees a particular face, the faster it recognizes and reflects that face. When nobody is in front of the mirror it learns and it dreams. What the viewer then observes is a flow of colour and digital movement containing shifting and abstracted face parts, hair, apparel and surroundings.

Image

Biography

Mario Klingemann is a German artist known for his work involving neural networks, code, and algorithms and recognized as a pioneer in the field of Artificial Intelligence (AI) art. He is considered a pioneer in the use of computer learning in the arts. He uses algorithms and artificial intelligence to create and investigate systems an is particularly interested in the human perception of art and creativity, researching methods in which machines can augment or emulate these processes. His wide-ranging artistic research spans generative art, cybernetic aesthetics, information theory, feedback loops, pattern recognition, emergent behaviours, neural networks, cultural heritage data and storytelling. He is artist in residence at the Google Arts & Culture Lab, winner of the Lumen Prize Gold 2018, the British Library Labs Creative Award. His work has been featured in art publications as well as academic research and has been shown worldwide in international museums and at art festivals like Ars Electronica, ZKM, the Photographers' Gallery, Collección Solo Madrid, Nature Morte Gallery New Delhi, Residenzschloß Dresden, Grey Area Foundation, Mediacity Biennale Seoul, the British Library, MoMA, and the Centre Pompidou.

Mario Klingemann:

“My preferred tools are neural networks, code and algorithms. My interests are manifold and in constant evolution, involving artificial intelligence, deep learning, generative and evolutionary art, glitch art, data classification and visualization or robotic installations. If there is one common denominator it’s my desire to understand, question and subvert the inner workings of systems of any kind. I also have a deep interest in human perception and aesthetic theory. Since I taught myself programming in the early 1980s, I have been trying to create algorithms that are able to surprise and to show almost autonomous creative behavior. The recent advancements in artificial intelligence, deep learning and data analysis make me confident that in the near future “machine artists” will be able to create more interesting work than humans. GANs, short for generative adversarial networks, are a particular architecture of deep neural networks which turned out to be very effective at learning how to generate new images based on a set of training examples. They are not the only way to create images with artificial intelligence, but many artists are using them because they are relatively easy to train and at the same time very versatile tools. The principle how they work has been explained many times by now, so I will not explain them in detail. The basic idea is that you have two neural networks, one, the generator tries to make images that look similar to the training examples it has been given. The other one, the discriminator, tries to learn to distinguish real images, like the ones from the training set from fake images, the ones that the generator makes. Initially both networks know nothing about the task they have to do and produce very unconvincing results, but every time one of the models makes a mistake, like when the generator gets caught with a fake or the discriminator lets a fake image pass, they learn from that and slightly improve their methods. Over time this back and forth between them makes both models become very good at their tasks so that eventually we as the human discriminators might not be able to distinguish a real image from a fake one anymore. My long-term goal is to find out how much autonomy I can give a machine and how far I can remove myself from this process. Apart from that I try not to get bored and to satisfy my curiosity about how the world might work and where it is heading.”

Mario Klingemann:

“My preferred tools are neural networks, code and algorithms. My interests are manifold and in constant evolution, involving artificial intelligence, deep learning, generative and evolutionary art, glitch art, data classification and visualization or robotic installations. If there is one common denominator it’s my desire to understand, question and subvert the inner workings of systems of any kind. I also have a deep interest in human perception and aesthetic theory. Since I taught myself programming in the early 1980s, I have been trying to create algorithms that are able to surprise and to show almost autonomous creative behavior. The recent advancements in artificial intelligence, deep learning and data analysis make me confident that in the near future “machine artists” will be able to create more interesting work than humans. GANs, short for generative adversarial networks, are a particular architecture of deep neural networks which turned out to be very effective at learning how to generate new images based on a set of training examples. They are not the only way to create images with artificial intelligence, but many artists are using them because they are relatively easy to train and at the same time very versatile tools. The principle how they work has been explained many times by now, so I will not explain them in detail. The basic idea is that you have two neural networks, one, the generator tries to make images that look similar to the training examples it has been given. The other one, the discriminator, tries to learn to distinguish real images, like the ones from the training set from fake images, the ones that the generator makes. Initially both networks know nothing about the task they have to do and produce very unconvincing results, but every time one of the models makes a mistake, like when the generator gets caught with a fake or the discriminator lets a fake image pass, they learn from that and slightly improve their methods. Over time this back and forth between them makes both models become very good at their tasks so that eventually we as the human discriminators might not be able to distinguish a real image from a fake one anymore. My long-term goal is to find out how much autonomy I can give a machine and how far I can remove myself from this process. Apart from that I try not to get bored and to satisfy my curiosity about how the world might work and where it is heading.”

Credits

Uncanny Mirror

2018

Computer. 82" 4k Monitor, Camera

2018

Computer. 82" 4k Monitor, Camera

Random International

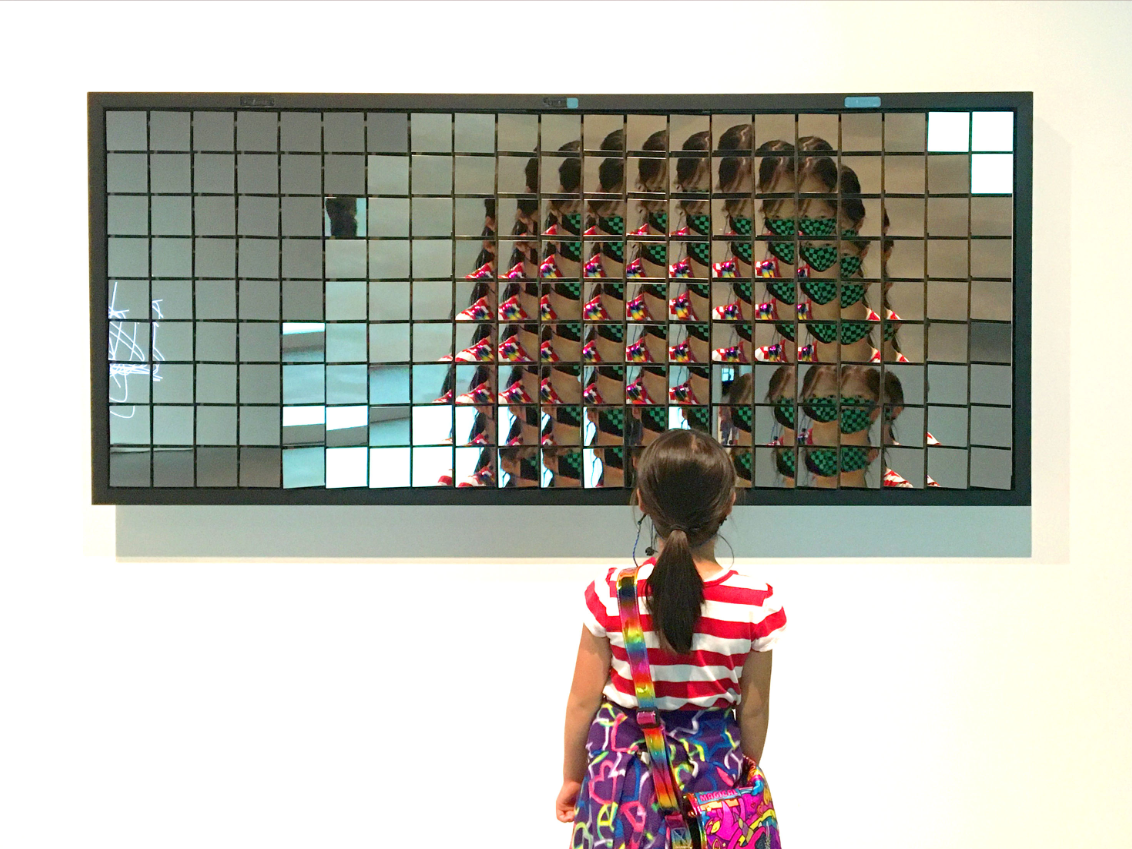

Fragments (2016)

A segmented, mosaic mirror with facial recognition software that identifies and follows each viewer. Each of the 189 mirrors robotically tilt selected squares toward the viewer. The piece is mounted on a kinetic panel installation made up of numerous mirrored squares that register your position and follow your movements. When you stand in the front of the mirror, sensors on each square tilt the surface of the individual panels so that they are facing the viewer. The squares only react if they register your presence, so only a partial section of the larger mirror moves, creating a compound eye effect. As the mirrored squares activate an impression is made in the grid, as though the subject is physically impacting the grid like a splash or a crater or a divot. Conversely, one could interpret the mirrors as wrapping around their subject. Either way, the reflection and the reflected perform a kind of interactive, performative dance. Mirrors fan out in a wave of motion, following each viewer. All the tiny motors can be heard, like an organic swarm of bees. If the subject moves a little and stops, the mirrors and motors adjust accordingly and stop. In this way, the observer’s motion, like their reflection, is also mirrored. The work has been interpreted as a commentary on vanity or surveillance but the artists connect it more with the relationship between man and machine and one’s identity in the digital age. As digital fragments of our identity are captured through our phones, surveillance cameras, and internet posts, the issue of who really owns these fragments is yet to be resolved.

Image

Biography

Art Group RANDOM INTERNATIONAL run a collaborative studio for experimental practice within contemporary art. Founded in 2005 by Hannes Koch and Florian Ortkrass, today they work with larger teams of diverse and complementary talent out of studios in London and Berlin. Questioning aspects of identity and autonomy in the post-digital age, the group’s work invites active participation. RANDOM INTERNATIONAL explores the human condition in an increasingly mechanised world through emotional yet physically intense experiences. The artists aim to prototype possible behavioural environments by experimenting with different notions of consciousness, perception, and instinct. Random International was founded by Florian Ortkrass born 1975, Rheda-Wiedenbrück,Germany. Graduated from Brunel University in 2002 and Royal College of Art in 2005 and Hannes Koch born 1975, Hamburg Germany. Graduated from Brunel University in 2002 and Royal College of Art in 2004. The collective has exhibited, and created public projects and installations worldwide.

Credits

Random International

Fragments

2016

Stainless Steel Mirrors, Aluminium, High-Density Fibre Board, Motors, Camera, Computer

225 x 101 x 11 cm

Fragments

2016

Stainless Steel Mirrors, Aluminium, High-Density Fibre Board, Motors, Camera, Computer

225 x 101 x 11 cm

Louis-Philippe Rondeau

Liminal (2018)

An installation that mirrors and distorts the behaviour and appearance of the viewer through slit scan technology.

This interactive installation employs a photographic process called slit-scan to spread out time in space. It encourages the viewer to perform in the space of the gallery and sets up a performance space. Viewers step back and forth through a vertical ring (or portal) of light, 2.75 meters in diameter. A black-and-white mirror image of each viewer is projected onto a wall. As viewers move through the ring, they can manipulate their reflection and parts of their body to contort, stretch and deconstruct. The artist notes that the work acts as a visual metaphor – the present constantly replacing the past – which inexorably terminates in white light. A white noise audio component encourages the performative aspects of the piece and can also be manipulated by the viewer. Sound is modulated according to the vertical location of the viewer’s body, and intensity is correlated to the physical involvement of the body, much like a theremin.

Image

Biography

Through technological experimentation, Rondeau’s research explores the boundaries of photographic portrait practices and his interest in the dematerialization of the photographic image. His works question instantaneity and flatness by engaging senses such as the proprioception, stereopsis and the subjective perception of time in the decryption of the image. Many of his interactive installations (re)consider the mirror; providing distorted records of appearance that seek to question the relationship between viewer and artwork. Rondeau teaches at the School of Digital Arts, Animation and Design of UQAC. HIs practice stems from his years working in the area of visual post-production in Montreal.

Credits

Louis-Philippe Rondeau

Liminal

2018

2 projectors, slit-scanner, interactive hoop

Liminal

2018

2 projectors, slit-scanner, interactive hoop

Daniel Rozin

PomPom Mirror (2015)

The work relies on motion sensors and 928 faux fur pom poms manipulated by 464 motors to create a mirror reflection of the viewer in real-time, generating moving silhouettes in response to movement. The work leverages a Kinect motion capture device to control 464 servos that, in turn, flip 928 black and white faux fur pom poms back and forth to match your movements in real-time. When the viewer moves within range, a sensor registers the motion and sends the data to a computer, which uses a bespoke algorithm to move motors attached to each pair of black and beige pom poms. The motors work to push and pull pairs of tufts back and forth, creating moving silhouettes that capture the viewer in action. The sound is like a hive of bees, growing louder the closer the viewer is to the work. The viewer’s silhouette shows up as black pom poms; beige pom moms are hidden out of view while black pom poms are pushed to the fore. The work is an extension of Rozin’s longstanding interest in exploring and mimicking the effects of mirrors.

Daniel Rozin

“Ghostly traces fade and emerge, as the motorised composition hums in unified movement, seemingly alive and breathing as a body of its own. A lot of my pieces use light and shadow in order to create pixels, My pieces are very boring when there's not a person in front of them, But the minute a person stands in front, it takes your image. I try to think that maybe it takes more than your image; maybe it's capturing something about your soul and displaying it back to you.”

Daniel Rozin

“Ghostly traces fade and emerge, as the motorised composition hums in unified movement, seemingly alive and breathing as a body of its own. A lot of my pieces use light and shadow in order to create pixels, My pieces are very boring when there's not a person in front of them, But the minute a person stands in front, it takes your image. I try to think that maybe it takes more than your image; maybe it's capturing something about your soul and displaying it back to you.”

Image

Biography

Rozin is an Israeli-American artist and a veteran and pioneer in the field of interactive art. Currently the Resident Artist and an Associate Art Professor at ITP (the Interactive Telecommunications Program) at NYU’s Tisch School of the Arts, he’s been working on mechanical mirrors for almost two decades. Among other honours he has been awarded the prestigious Chrysler Design Award that celebrates the achievements of individuals in innovative works of architecture, art and design which have significantly influenced modern American culture.

As an interactive artist Rozin creates installations and sculptures that have the unique ability to change and respond to the presence and point of view of the viewer. In many cases the viewer becomes the contents of the piece and in others the viewer is invited to take an active role in the creation of the piece. He studied industrial design at the Bezalel Academy in Jerusalem and has shown worldwide.

Danny Rozin:

“I do everything for my pieces by myself. I program them, I design them – no one else has ever touched one of my pieces. I don’t have interns, I don’t have assistants. I want to make art, I don’t want to produce art"

Danny Rozin:

“I do everything for my pieces by myself. I program them, I design them – no one else has ever touched one of my pieces. I don’t have interns, I don’t have assistants. I want to make art, I don’t want to produce art"

Credits

Daniel Rozin

"PomPom Mirror"

2015

928 faux fur pom poms, 464 motors, control electronics, Kinect camera, custom software, microcontroller, wooden armature

48 x 48 x 18 in

121.9 x 121.9 x 45.7 cm.

"PomPom Mirror"

2015

928 faux fur pom poms, 464 motors, control electronics, Kinect camera, custom software, microcontroller, wooden armature

48 x 48 x 18 in

121.9 x 121.9 x 45.7 cm.

Shinseungback Kimyonghun

Nonfacial Mirror (2013)

You must distort or cover your face or some part of it to encounter your face. Different marketing and surveillance technologies try to capture our face because faces provide valuable information about our emotions and identities. But this mirror is different. It avoids faces. You can look at your face in the mirror only when it is a “nonface”. ShinSeungBack.

“With technology we humans become the creator. We create the world ourselves. The work is getting digitalized and so are the humans. Our children who will live in the 22nd century might as well be called human but they will be much different from us. This mirror turns away when it sees a face, so in order to see your face in it you have to make your face distorted or you have to cover some part of it to encounter your face."

“With technology we humans become the creator. We create the world ourselves. The work is getting digitalized and so are the humans. Our children who will live in the 22nd century might as well be called human but they will be much different from us. This mirror turns away when it sees a face, so in order to see your face in it you have to make your face distorted or you have to cover some part of it to encounter your face."

Image

Biography

Shinseungback Kimyonghun is a Seoul-based artistic duo consisting of Shin Seung Back and Kim Yong Hun. Shin Seung Back studied Computer Science and Kim Yong Hun completed studies in Visual Arts. They met while studying at the Graduate School of Cultural Technology at KAIST. After completing a Masters in Science and Engineering and doing experimental works, they started to work together as Shinseungback Kimyonghun. Through a background in computer science and visual arts, their collaborative practice explores the impact of technology on humanity. In order to understand the impact of digital life, they felt the need to understand technology and humanity in broad ways, and thus a collaboration would be meaningful. Seung Back is an engineer interested in creative works and Yong Hun an artist with an interest in exploring technology, Seung Back is interested in the materiality of the computer: Yong Hun thinks about human nature.

Credits

Nonfacial Mirror

2013

A facial mirror, webcam, servomotor, Arduino, computer, face detection algorithm, custom software and wooden pedestal

30x30x135cm

2013

A facial mirror, webcam, servomotor, Arduino, computer, face detection algorithm, custom software and wooden pedestal

30x30x135cm

Klaus Obermaier / Stefano D’Alessio & Martina Menegon

ego (2018)

An installation that reverses and deforms the mirror image through movement of the viewer.

Performance, psychoanalysis and technology come together to produce a humorous, interactive and performative work. The theory behind the work is base on Lacanian theory. The psychology the mirror stage describes the formation of the Ego via the process of objectification, the Ego being the result of a conflict between one's perceived visual appearance and one's emotional experience. This identification is what psychoanalyst Jacques Lacan called alienation. The interactive installation EGO re-stages and reverses the process of alienation by enhancing and deforming the mirror image by the movements of the users. Although an abstraction, it quickly becomes the self and reestablishes the tension between the real and the symbolic, the Ego and the It, the subject and the object.

Image

Biography

Klaus Obermaier

Klaus Obermaier has presented innovative multimedia performances and art works in festivals and theatres throughout Europe, Asia, North and South America and Australia. Obermaeir is interested in contributing to the development of new forms of artistic interaction between humans and digital systems. He s a visiting professor at IUAV University in Venice, Italy and University Babes-Bolyai de Cluj-Napoca in Romania, where he teaches interactive art and performance. He has previously lectured on composition at Webster University in Vienna and taught choreography and new media at the Accademia Nazionale di Danza di Roma. From 2016 to 2018 he was co-director of the Master for Advanced Interaction at the IAAC (Institute for Advanced Architecture of Catalonia) in Barcelona, Spain. He has given lectures at numerous international colloquia and institutions. Klaus Obermaier divides his time between Vienna and Barcelona.

Stefano D’Allesio + Martina Menegon

Martina Menegon and Stefano D’Alessio are two Italian artists with backgrounds in Visual and Performing Arts from the IUAV University of Venice, and in Transmedial Art at the Universität für Angewandte Kunst in Vienna. Since 2010 they have collaborated with Klaus Obermaier. As programmers and teaching assistants at IUAV University, they teach the use of maxMSP/Jitter software and its applications in the artistic and performative field. Their interest in interactive installations and performances investigate the relationship between the biological body and its digital transposition. Their artistic interest relates to body digitisation, distortion of the senses and interaction between body and technology. They have performed and exhibited throughout Europe.

Klaus Obermaier has presented innovative multimedia performances and art works in festivals and theatres throughout Europe, Asia, North and South America and Australia. Obermaeir is interested in contributing to the development of new forms of artistic interaction between humans and digital systems. He s a visiting professor at IUAV University in Venice, Italy and University Babes-Bolyai de Cluj-Napoca in Romania, where he teaches interactive art and performance. He has previously lectured on composition at Webster University in Vienna and taught choreography and new media at the Accademia Nazionale di Danza di Roma. From 2016 to 2018 he was co-director of the Master for Advanced Interaction at the IAAC (Institute for Advanced Architecture of Catalonia) in Barcelona, Spain. He has given lectures at numerous international colloquia and institutions. Klaus Obermaier divides his time between Vienna and Barcelona.

Stefano D’Allesio + Martina Menegon

Martina Menegon and Stefano D’Alessio are two Italian artists with backgrounds in Visual and Performing Arts from the IUAV University of Venice, and in Transmedial Art at the Universität für Angewandte Kunst in Vienna. Since 2010 they have collaborated with Klaus Obermaier. As programmers and teaching assistants at IUAV University, they teach the use of maxMSP/Jitter software and its applications in the artistic and performative field. Their interest in interactive installations and performances investigate the relationship between the biological body and its digital transposition. Their artistic interest relates to body digitisation, distortion of the senses and interaction between body and technology. They have performed and exhibited throughout Europe.

Credits

Klaus Obermaier / Stefano D’Alessio & Martina Menegon

EGO

2015

projector, kinect camera, motion tracking technology + algorithms

EGO

2015

projector, kinect camera, motion tracking technology + algorithms